Game Developers Conference 2023: Generative AI, MetaHuman, and more

The Game Developers Conference (GDC) 2023, held in San Francisco from March 20 to 24, wasn’t just about upcoming titles and honoring last year’s best games. The event also showcased how the gaming industry is adopting new technologies such as generative AI and working to make in-game characters and environments as realistic as possible.

Here are the key takeaways for the gaming industry from GDC 2023:

MetaHuman

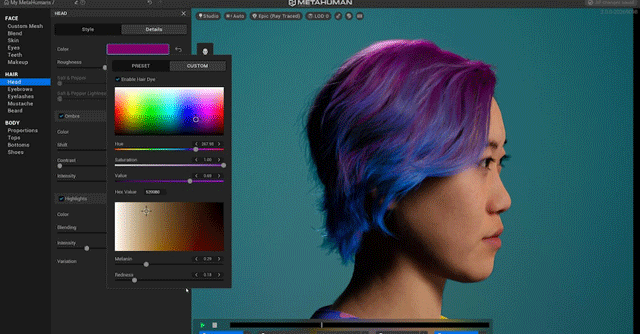

High-quality animation is essential if photorealistic synthetic humans are to deliver convincing performances, but until now it has demanded more time and resources than even the most talented animators could usually spare.

Epic Games aims to change that with MetaHuman Animator, a tool designed to let creators easily produce high-quality facial performance animation for MetaHuman characters. It takes real-world captures and renders a digital replica in real time on high-end PCs equipped with RTX graphics cards. It will soon be available on iPhone.

Generative AI

Unity, the popular game engine, has teased on Twitter what appears to be the integration of generative AI into its toolkit. On a black background, the teaser displays text that reads “give me a large-scale area with a melancholy sky,” “add a dozen NPCs,” and “make them flying alien mushrooms.”

“Unity AI. Here to bring you flying alien mushrooms. Curious? Apply to be a Unity AI Insider: https://t.co/CLHRYylqbP” (Unity for Games @ #GDC23, @unitygames, March 22, 2023)

The teaser suggests that the tool can generate this kind of content from simple text prompts. It is an ambitious idea, but if it works as shown, it could make game development considerably easier.

Generative AI has drawn a great deal of attention since OpenAI released ChatGPT in November 2022, thanks to the chatbot’s ability to produce human-like responses to user questions and to write computer code on request.

Ubisoft also made an AI-related announcement, introducing Ghostwriter, a tool designed to save scriptwriters time by generating first drafts of NPC barks, the short lines non-player characters speak in reaction to in-game events.

Epic Games' Electric Dreams demo took place in a virtual woodland partially generated using the new procedural generation techniques featured in Unreal Engine 5.2.

The new shot-creation and scene-control features in the Unreal Engine 5.2 Preview are aimed at improving virtual production workflows. For instance, support for running several virtual cameras (VCams) during a shoot gives directors more flexibility.

Making games more realistic

Epic Games also discussed its hit game, Fortnite, but the conversation centered on the studio’s use of Lumen, Unreal Engine 5’s dynamic global illumination system, and on how the team rolled out a substantial visual update without disrupting the live service.

Because any building in Fortnite can be demolished at any time, lighting has to be fully dynamic, which poses real challenges. The developers implemented dynamic lighting that responds to the many forms of player-caused destruction. The talk also demonstrated how lighting can be adjusted for a specific location, keeping it playable even when conditions elsewhere are too dark to play in.