Apple iMessage nudity filter for child safety rolling out to more countries, India not on list yet

Apple’s child safety filter, which prevents underage users from viewing images containing any form of nudity, is now rolling out to more countries beyond the USA. According to reports by The Guardian and AppleInsider, the feature is being expanded to Australia, Canada, New Zealand and the UK. However, India is not yet on the list of countries where Apple is enabling the feature.

The setting, called communication safety in Messages, was introduced by Apple in December last year as part of its child safety measures, which also included plans to detect child sexual abuse material (CSAM). Once the setting is enabled on an iPhone registered to an underage user, the phone shows the child a warning whenever an image sent via Messages contains nudity.

The feature, however, drew considerable controversy when Apple first introduced it. Many critics flagged it as a potentially intrusive technology, especially since it deals with sensitive imagery. Apple has since said that the feature uses on-device machine learning to detect nudity, so no sensitive content is sent to Apple’s servers.

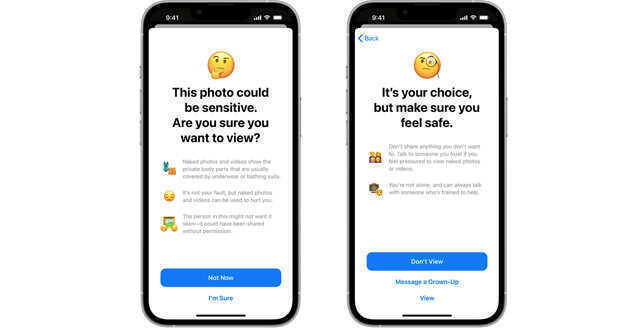

Along with on-device machine learning, Apple uses family-linked Apple IDs and registered age data to determine the age of the person using the iPhone. For users under the age of 13, Apple blocks the image in question and offers options to block the contact who sent the photo and to contact a parent or guardian. Users aged 13 to 18 are shown two prompts that act as roadblocks, which they must click through in order to view the image.

While Apple has rolled out the on-device nudity filter, it has scaled back its original plan to use anonymised hashes, or identifiers, of known images from the database of the National Center for Missing & Exploited Children and match them against images in a person’s iOS photo library. Although that project aimed to crack down on child exploitation and trafficking, its implementation has since been delayed without further explanation.