Nvidia launches new GPU architecture for growing AI workloads in data centres

Nvidia has introduced a new architecture for its data centre GPUs. The Ampere architecture, launched two years ago, is being replaced by the new Hopper architecture, which is designed to handle artificial intelligence (AI) workloads in data centres faster. The architecture is named after US computer scientist Grace Hopper, Nvidia said.

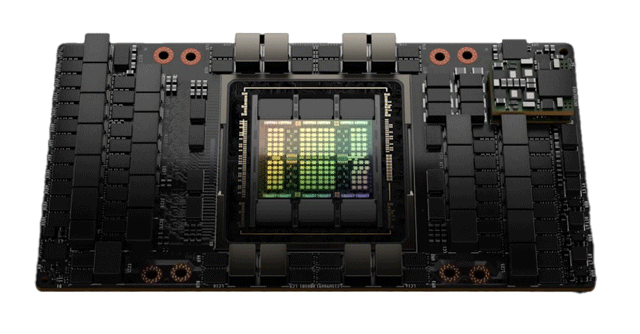

The first GPU based on the Hopper architecture will be the H100, which packs in 80 billion transistors and can be deployed across data centre types, including on-premises, cloud, hybrid-cloud, and edge. The H100 will be available from Q3 2022, and leading cloud service providers are expected to deploy it later this year. Nvidia claims the H100 is the world’s most advanced chip ever built.

In terms of performance, Nvidia says the H100 GPU can run the Megatron 530B chatbot, a large generative language model, up to 30 times faster than its predecessor, the Ampere-based A100. Nvidia said the Hopper GPU uses a new Transformer Engine that can train deep learning networks and natural language processing (NLP) models up to 6 times faster than its predecessor without losing accuracy.

The new architecture also offers stronger security. It uses an improved version of Nvidia's secure Multi-Instance GPU (MIG) technology, which allows a single GPU to be split into seven smaller, isolated instances so that multiple jobs can be handled simultaneously in a secure environment.

The other security feature is confidential computing, which allows the GPU to keep AI models and data secure while they are being processed. Nvidia believes it can also be applied to federated learning in privacy-sensitive sectors such as banking, financial services and insurance (BFSI) and healthcare, as well as on shared cloud infrastructure. Federated learning is a branch of AI that allows multiple organisations to collaborate and train AI models without having to share their proprietary datasets with each other.
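To make the idea of federated learning concrete, here is a minimal sketch of federated averaging, one common way of combining locally trained models. It is an illustration only and not Nvidia's implementation: the one-parameter model, the function names, and the toy datasets are all assumptions made for the example. Each organisation trains on its own data and sends back only a model weight, never the data itself.

```python
from typing import List, Tuple

# One organisation's private dataset: (x, y) pairs that never leave the client.
Dataset = List[Tuple[float, float]]


def local_update(w: float, data: Dataset, lr: float = 0.01, steps: int = 20) -> float:
    """Run a few gradient-descent steps for the model y = w * x on private data."""
    for _ in range(steps):
        # Gradient of the mean squared error with respect to w.
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def federated_average(updates: List[Tuple[float, int]]) -> float:
    """Combine client weights, weighting each by its number of samples."""
    total = sum(n for _, n in updates)
    return sum(w * n for w, n in updates) / total


def train(clients: List[Dataset], rounds: int = 50) -> float:
    """Coordinator loop: broadcast the weight, collect updates, average them."""
    w = 0.0
    for _ in range(rounds):
        # Only (weight, sample count) pairs are shared with the coordinator.
        updates = [(local_update(w, data), len(data)) for data in clients]
        w = federated_average(updates)
    return w


# Hypothetical toy data: two organisations whose data both follow y = 2x.
clients = [
    [(1.0, 2.0), (2.0, 4.0)],
    [(3.0, 6.0), (4.0, 8.0), (5.0, 10.0)],
]
global_w = train(clients)
```

In this toy setup the shared weight converges towards 2, the slope both datasets follow, even though neither organisation ever sees the other's records.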

“Data centres are becoming AI factories, processing and refining mountains of data to produce intelligence,” Jensen Huang, founder and chief executive officer of Nvidia, said in a statement.

Huang points out that the H100 will power the world's AI infrastructure that enterprises use to accelerate their AI-driven businesses.

The Hopper architecture also supports Nvidia’s NVLink interconnect, which links multiple GPUs so that they can work together as one much larger GPU. What sets it apart from its predecessor is that it can now be connected to an external NVLink Switch, allowing up to 256 H100 GPUs to be connected and used as a single GPU, with a compute power of up to 192 teraflops, for large-scale AI models that process massive amounts of data.

GPUs based on the new architecture can also be paired with Nvidia’s Grace CPUs through an NVLink-C2C interconnect, which delivers over 7 times faster communication between the CPU and GPU than PCIe 5.0. PCIe 5.0 is a serial computer expansion bus standard that transfers data between components at high bandwidth.
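For a rough sense of scale, the short calculation below estimates what "7 times PCIe 5.0" would mean in practice. The PCIe 5.0 signalling rate (32 GT/s per lane) and its 128b/130b encoding come from the PCIe standard. The x16 link width and the choice to compare against the bidirectional figure are assumptions made for this sketch, since the comparison basis is not stated here.

```python
def pcie_bandwidth_gb_per_s(
    gt_per_s: float,
    lanes: int,
    payload_bits: int = 128,
    encoded_bits: int = 130,
) -> float:
    """Usable bandwidth of a PCIe link in GB/s, for one direction."""
    raw_gbit_per_s = gt_per_s * lanes            # one bit per transfer per lane
    usable_gbit_per_s = raw_gbit_per_s * payload_bits / encoded_bits
    return usable_gbit_per_s / 8                 # bits to bytes


# PCIe 5.0 runs at 32 GT/s per lane; x16 is an assumed link width.
per_direction = pcie_bandwidth_gb_per_s(32.0, 16)
bidirectional = 2 * per_direction

# Assumption: the "7 times" claim is measured against the bidirectional figure.
implied_c2c = 7 * bidirectional

print(f"PCIe 5.0 x16, one direction: {per_direction:.1f} GB/s")
print(f"PCIe 5.0 x16, bidirectional: {bidirectional:.1f} GB/s")
print(f"Implied by a 7x speed-up:    {implied_c2c:.1f} GB/s")
```

Under these assumptions a PCIe 5.0 x16 link carries about 63 GB/s in each direction, so a link that is more than 7 times faster would move upwards of roughly 880 GB/s in total.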