IIT Madras’ new phone camera algorithms claim to create 3D depth in videos

A team comprising researchers at the Indian Institute of Technology (IIT) Madras and Northwestern University in Illinois, USA have teamed up to create algorithms, which they claim will help add a layer of 3D depth atop standard video clips recorded through smartphones. These algorithms seemingly help bypass the need for optical elements in the creation of stereoscopic depth – something that is difficult to execute on a smartphone due to the lack of available space for multiple camera lenses.

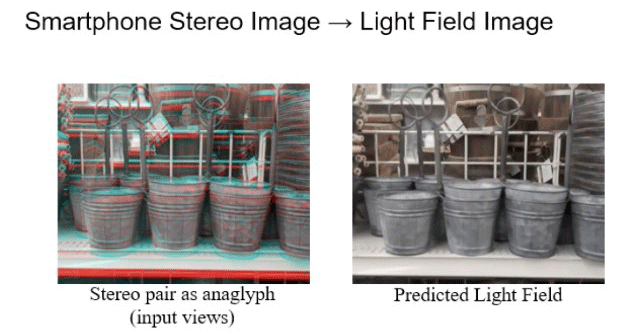

The effect that the algorithms seek to reproduce is what professional depth-recording cameras, also ‘light field’ (LF) cameras or plenoptic cameras, have done over time. The key difference between a regular camera and an LF camera is that while the former can only record the intensity of light within a particular scene, the latter can record both intensity and direction of light.

LF cameras achieve this by placing multiple micro-lenses between the main lens and the image sensor of the cameras in question. It is this directional information that can help the sense of depth in a scene being reproduced – therefore producing a 3D effect.

3D depth recording through smartphone cameras is not new. Smartphone makers have been including 3D time of flight (ToF) sensors to record light direction data and incorporate them into photographs for years now. Furthermore, most phone cameras today offer a ‘portrait’ mode, which attempts to simulate a sense of depth within a photograph by replicating the depth of field that is optically produced by high aperture lenses.

For context, higher the aperture of a lens (i.e. lower the F-number), the shallower is the depth of field – the area that remains in focus at the highest aperture of the lens. Such shallow depth can produce artistic blurs due to the optical properties of the lenses, thereby adding a sensation of depth to the photographs.

In terms of videography too, smartphone makers have attempted to include software-driven bokeh video modes. However, the latter have largely been algorithm-based background blurring – rather than actually rendering a 3D plane for videos.

In the latest paper authored by Kaushik Mitra and Prasan Shedligeri of IIT Madras alongside three other researchers from Northwestern University, the researchers have stated that due to a lack of space to accommodate micro lenses in between the main lens and the image sensor of the typical smartphone camera, such depth creation in videography has been difficult.

In order to achieve this, the researchers first recorded the same video as a stereo pair through two adjacent cameras on a smartphone. This could be achieved since most mainstream phone cameras today offer at least a dual-camera setup. The stereo pair of videos are then converted into a 7x7 camera array, therefore mimicking a setup similar to the micro lens arrangement of a plenoptic camera – or a multi-camera array that can be used for stereoscopic 3D video recording with professional setups.

The algorithms in question, which use machine learning techniques, are then implemented to this 7x7 array of the stereo videos. A timeline of temporal continuity is then established by these algorithms, therefore aligning the array in sync with the timecode of the video. This entire process helps create a light field, bringing directional light information into the video being processed.

“A crucial advantage of the algorithm developed by our team is that it eliminates the need for fancy equipment or arrays of lenses to capture videos with depth. The bokeh and other such aesthetic 3D effects can be achieved with a smartphone that is equipped with a dual-camera system. In addition to providing depth, our algorithm enables us to view the same video from not just one point of view, but from any of the 7x7 grid of viewpoints,” said Mitra.

The results of this project were published in the progress report of the Institute of Electrical and Electronics Engineers (IEEE) and the Computer Vision Foundation (CVF)’s International Conference on Computer Vision, 2021.