Meta's ReSkin could help robots feel human-like touch sensations

Earlier today, Meta, formerly Facebook, announced ReSkin, a new open source ‘skin’ developed in partnership with Carnegie Mellon University, which the company claims cracks the formula for producing efficient, accurate and effective touch sensors and simulators. If it lives up to its claimed potential, ReSkin could prove a key enabler of robotic development, as it uses a self-supervised machine learning algorithm, meaning it doesn't need classified and labeled data to recognise patterns. This, the company has claimed, will help automatically recalibrate the touch sensors in its system, making it a practical solution for long term usage.

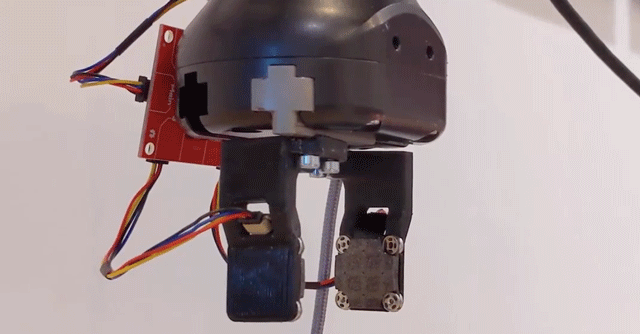

ReSkin sits alongside Digit, which Facebook has so far detailed as a “high resolution, low cost, compact” tactile sensor. Thanks to its small dimensions, the sensor can apparently be mounted on the fingertips of humanoid robots. It has an elastomer gel surface inlaid with RGB LEDs, and was designed using what the company says is a “silicon and acrylic” manufacturing process, something that has apparently helped it achieve a balance between sensitivity and ruggedness. The entire process behind ‘Digit’ has been open sourced, and Facebook said today in a statement that it will be made commercially in partnership with GelSight.

How will ReSkin be beneficial?

In a blog post announcing the development, Facebook research manager Abhinav Gupta and fellow Tess Hellebrekers wrote, “AI today effectively incorporates senses like vision and sound, but touch remains an ongoing challenge. That’s in part due to limited access to tactile sensing data in the wild. As a result, AI researchers hoping to incorporate touch into their models struggle to exploit richness and redundancy from touch sensing the way people do.”

“ReSkin is inexpensive to produce, costing less than $6 each at 100 units and even less at larger quantities. It’s 2-3mm thick and can be used for more than 50,000 interactions, while also having a high temporal resolution of up to 400Hz and a spatial resolution of 1mm with 90 percent accuracy,” the researchers added. These specifications, they claim, will make it "ideal" for various robotic hands, tactile gloves, arm sleeves and even dog shoes. The more use cases there are, the more data about touch interactions they can collect for their AI models.

"ReSkin can also provide high-frequency three-axis tactile signals for fast manipulation tasks like slipping, throwing, catching, and clapping. And when it wears out, it can be easily stripped off and replaced," they said.

In simpler words, this version of the synthetic skin widens the scope for robots to carry out operations that are difficult or hazardous but require a sensitive touch. For instance, applications that involve interaction with harmful chemicals, which may range from industrial manufacturing processes to automated geological explorations, may make the most of a product such as ReSkin.

How ReSkin works

ReSkin uses magnetic proximity sensors to collect its data, which helped the researchers circumvent the need for electronic sensory conductors between a surface and, say, a robotic arm equipped with a touch sensor. This, the researchers claim, has helped them to better simulate the function of human touch, which uses sensory conduction between a surface, the skin and the nerves underneath the skin to formulate a response to various forms of touch. This structure is combined with data collected from multiple sensors, which helps produce a skin that’s easy to attach and detach.
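To give a rough sense of why magnetic sensing can pick up small deformations, here is a toy Python sketch. It is not Meta's model: it assumes a single point magnetic dipole sitting directly above a sensor, uses the textbook on-axis dipole formula, and the particle strength and the 3mm-to-2mm compression are hypothetical numbers chosen only for illustration.

```python
import math

MU_0 = 4 * math.pi * 1e-7  # vacuum permeability, in T*m/A


def on_axis_dipole_field(moment, distance):
    """Field strength (tesla) on the axis of a point magnetic dipole.

    Textbook formula: B = mu_0 * m / (2 * pi * r^3).
    """
    return MU_0 * moment / (2 * math.pi * distance ** 3)


# Hypothetical values, not taken from Meta's post:
# a 1e-6 A*m^2 magnetic particle, and a 3 mm skin pressed down to 2 mm.
MOMENT = 1e-6
rest = on_axis_dipole_field(MOMENT, 3e-3)
pressed = on_axis_dipole_field(MOMENT, 2e-3)
ratio = pressed / rest

print(f"rest: {rest:.2e} T, pressed: {pressed:.2e} T, ratio: {ratio:.3f}")
```

Because the field falls off with the cube of distance in this toy model, a one millimetre squeeze changes the reading several times over, which is the kind of signal a nearby magnetic sensor could register without any wiring running through the skin itself.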

The post further adds, “We also exploited advances in self-supervised learning to fine-tune sensors automatically using small amounts of unlabelled data. We found that a self-supervised model performed significantly better than those that weren’t; instead of providing ground-truth force labels, we can use relative positions of unlabelled data to help fine-tune the sensor’s calibration.”
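The idea of calibrating from relative positions rather than ground-truth labels can be sketched in a few lines of Python. This is a deliberately simplified illustration, not the researchers' method: it assumes a single-channel sensor whose reading is a linear function of contact position, and it assumes the offset between two touches is known (for example, because a robot moved a probe by a commanded distance). The names `fit_gain_from_pairs`, `TRUE_GAIN` and `OFFSET` are hypothetical, and the data is synthetic.

```python
import random


def fit_gain_from_pairs(pairs):
    """Least-squares gain g minimising sum((g * (r_a - r_b) - delta)^2).

    Each pair is (reading_a, reading_b, delta), where delta is the known
    relative displacement between the two touches. No absolute position
    label is ever used.
    """
    num = sum((ra - rb) * delta for ra, rb, delta in pairs)
    den = sum((ra - rb) ** 2 for ra, rb, _ in pairs)
    return num / den


rng = random.Random(0)
TRUE_GAIN = 0.8  # hypothetical: millimetres per raw sensor unit
OFFSET = 3.0     # hypothetical unknown sensor bias; cancels in differences

pairs = []
for _ in range(500):
    # Synthetic contact positions in mm; the fit never sees these directly.
    pos_a, pos_b = rng.uniform(0, 20), rng.uniform(0, 20)
    ra = pos_a / TRUE_GAIN + OFFSET + rng.gauss(0, 0.05)
    rb = pos_b / TRUE_GAIN + OFFSET + rng.gauss(0, 0.05)
    pairs.append((ra, rb, pos_a - pos_b))

estimated_gain = fit_gain_from_pairs(pairs)
print(f"estimated gain: {estimated_gain:.3f} mm per unit")
```

Note that relative data alone pins down the sensor's scale but not its absolute zero point, since any constant bias drops out when two readings are subtracted.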

In essence, ReSkin can work to become better on its own, without the need for a connected framework of supervised AI algorithms interacting with it. This, the researchers state, is the first page in the newly christened Meta's work on AI touch sensing and calibration. “We’re announcing an open-source ecosystem for touch processing that includes high-resolution tactile hardware (DIGIT), simulators (TACTO), benchmarks (PyTouch), and data sets,” adds the post, underlining the future of robotic touch simulation that Facebook aims to work on. Will this be a part of the larger ‘metaverse’?