Pichai’s enthusiasm translates to remarkable reality as AI suffuses Google’s show, products

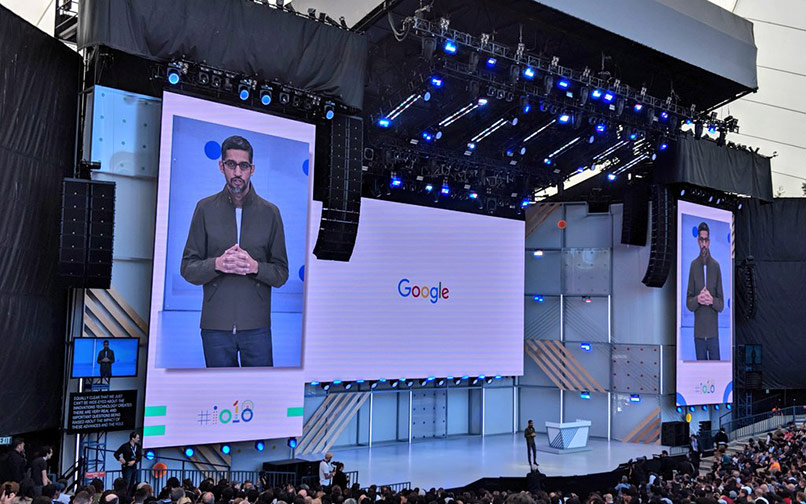

If you thought Google CEO Sundar Pichai was overly enthusiastic about artificial intelligence (AI) at Davos this year, gushing like a teenager in the throes of a first romance, think again.

Why? Because this time around, at the company's annual developer conference, Google I/O, Pichai showed that he had put his words into action. The internet giant, he demonstrated, has made impressive strides in AI by integrating improved AI engines into most of its products, including the Assistant, Gmail, Maps, Photos, Android and Waymo, the company's autonomous driving unit.

Delivering his keynote address at Google I/O, Pichai first ran through the major improvements the company had made in vision learning and AI since last year's conference. He then moved on to the company's use of AI and machine learning in healthcare.


Healthcare

The CEO said that Google has been driving efforts to develop non-invasive ways to check for cardiovascular diseases and detect diabetic retinopathy in patients. In February, the company's AI research team said it was using deep learning techniques on one of its computer vision engines to assess cardiovascular risk factors.

At the developer conference, Pichai added that a new healthcare programme would soon be launched in partnership with hospitals, while existing projects are already running in India’s eye speciality hospitals. Rival Microsoft has also been making rapid AI strides in the healthcare sector. In India, the Satya Nadella-led company has partnered with Apollo Hospitals for early detection of heart-related diseases. It has also launched a five-year, $25 million AI programme to help developers who are working on AI programs for differently-abled people.

Elaborating on Google's healthcare efforts, the CEO said the company had developed a new way for differently-abled people to communicate in Morse code using its AI-driven keyboard, Gboard, which auto-corrects eight billion words per day.

With Morse code and machine learning working together to support assistive text entry, we've added a new and accessible way to input communication on your @Android phone with the Morse code keyboard—now available in #Gboard beta. #io18 pic.twitter.com/P0HcTeFcyC

— Google (@Google) 8 May 2018
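As a rough illustration of the text-entry idea, the sketch below turns a typed sequence of dots and dashes into letters. It is not Gboard's implementation, which Google did not detail at the event, and it leaves out the machine-learning suggestions entirely. The names `MORSE_TO_CHAR` and `decode_morse` are hypothetical, and the input convention (a space between letters, " / " between words) is an assumption made for the example.

```python
# Hypothetical lookup table for the 26 letters of International Morse code.
MORSE_TO_CHAR = {
    ".-": "A", "-...": "B", "-.-.": "C", "-..": "D", ".": "E",
    "..-.": "F", "--.": "G", "....": "H", "..": "I", ".---": "J",
    "-.-": "K", ".-..": "L", "--": "M", "-.": "N", "---": "O",
    ".--.": "P", "--.-": "Q", ".-.": "R", "...": "S", "-": "T",
    "..-": "U", "...-": "V", ".--": "W", "-..-": "X", "-.--": "Y",
    "--..": "Z",
}


def decode_morse(signal: str) -> str:
    """Decode dots and dashes into text.

    Assumed convention: letters are separated by spaces and words
    by " / ". Unknown sequences are rendered as "?".
    """
    decoded_words = []
    for word in signal.strip().split(" / "):
        letters = [MORSE_TO_CHAR.get(code, "?") for code in word.split()]
        decoded_words.append("".join(letters))
    return " ".join(decoded_words)


if __name__ == "__main__":
    print(decode_morse(".... . .-.. .-.. --- / .-- --- .-. .-.. -.."))  # HELLO WORLD
```

A real assistive keyboard would layer word prediction and auto-correction on top of a lookup like this, which is where the machine learning mentioned in the tweet would come in.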

Before moving on to new AI features in Gmail, Pichai also touched on the company's progress in natural language processing and natural-sounding speech. He showed an example of how AI running on its WaveNet technology can help audiences follow TV debates, especially those where one person ends up talking over the other.

In January this year, the internet giant said it had developed a text-to-speech artificial intelligence system, called Tacotron 2, that can speak in a very human-like voice.

In March, the company said it had found a way to make AI-generated voices sound much more natural using WaveNet technology, which generates speech by modelling audio waveforms on samples of human speech, as well as on previously generated audio. Siri and Cortana, by contrast, reply to the user with actual recordings of a human voice, rearranged and combined.

Gmail

A new feature powered by AI, we're launching Smart Compose in @gmail to help you draft emails more quickly by providing interactive suggestions as you type → https://t.co/nJIWG29M0T #io18 pic.twitter.com/DKjqDdl5ts

— Google (@Google) 8 May 2018

Talking about the new Gmail feature, Pichai said the firm would roll out Smart Compose later this month. Described as an extension of the Smart Reply feature, Smart Compose suggests ways to complete sentences as the user types and can propose alternative messages using a contextual engine.

Google Photos

It's easier than ever to take action on your pictures in @googlephotos. Rolling out today, you may start to see suggestions to brighten, archive, share, or rotate your photos, right on the image. #io18 pic.twitter.com/NPT0l0GuBy

— Google (@Google) 8 May 2018

The CEO then moved on to the company's use of AI in Google Photos. The AI in the app offers context-driven photo-editing suggestions, he said.

Hardware

Today we're announcing our third generation of TPUs. Our latest liquid-cooled TPU Pod is more than 8X more powerful than last year's, delivering more than 100 petaflops of ML hardware acceleration. #io18 pic.twitter.com/m8OH5vFw4g

— Google (@Google) 8 May 2018

Moving on to hardware, Pichai showcased the third generation of the company's Tensor Processing Unit (TPU) chips. Google developed these chips in the middle of last year to help customers run hyper-scale computing projects on its servers (via cloud-based services) and has been renting them out since February. The CEO said the new third-generation TPU pods were more than eight times as powerful as last year's, so much so that the internet giant had to introduce liquid cooling for its servers.

Rival Microsoft has a very different take on chips such as TPUs. It believes that since machine-learning models are changing so fast, TPUs will soon become obsolete and the money spent on developing them will go to waste. Hence, the Redmond-headquartered company has started using chips that can be custom-configured.

Improvements in Google Assistant

"One of the biggest areas we're tackling with AI is the #GoogleAssistant...Our vision for the perfect assistant is that it would be natural and comfortable to talk to, and there at the right moment in the right way when you need it." –@sundarpichai #io18

— Google (@Google) 8 May 2018

The CEO then moved on to improvements in Google Assistant on devices such as smartphones and Google Home, and gave the stage to Google Assistant vice-president Scott Huffman. Huffman said that Google Assistant, rolled out just two years ago, can now be accessed on 500 million devices across 30 languages in 80 countries. He added that the Assistant is also available in cars from over 40 brands and on 5,000 connected devices.

In addition, Huffman said that the Assistant has been improved so that the user doesn't have to say 'Hey Google' or 'OK Google' every time before talking to it. The Assistant is now capable of back-and-forth conversation with the user, he said, adding that it had played nearly 130,000 hours of storytelling for children in the last three months.

Acknowledging concerns that children who interact with Google Home may become more demanding, the company said it had introduced a 'pretty please' feature for kids.

The company also unveiled a new category of devices, called smart displays, on which the Assistant can provide both visual and voice assistance to users. It also said that the Assistant experience had been made richer on smartphones and Google Home.

Explaining other features of the virtual assistant, the internet giant said that the Assistant would soon be accessible from the navigation menu in Google Maps so that drivers don't have to touch or change screens. The Assistant can also now help users order from popular food chains. So far, the company has forged partnerships with Starbucks, Domino’s, Dunkin’ Donuts and others.

#GoogleIO @Google @sundarpichai unveils Google Duplex — the NLS feature of Assistant that can make seamless conversations @TechCircleIndia ... pic.twitter.com/sI7pJIbPB6

— Anirban Ghoshal (@journoanirban) 8 May 2018

Taking over from Huffman, Pichai showcased perhaps the most impressive and intriguing feature of the Assistant: the ability to make phone calls and talk to people on the user's behalf, which also demonstrates the company's prowess in natural language processing. Pichai played examples of the Assistant calling a barber for an appointment and a restaurant’s manager to book a table. In both, the Assistant sounded remarkably like a real person. Pichai said that although the feature was far from being released, Google was already running an experiment with small and medium businesses to help them avoid unnecessary calls.

Explaining further, Pichai said that since many people call businesses just to check whether they are open, the firm is trying to deploy the Assistant to take those calls and answer those queries. "We want to connect users to businesses in a good way. Sixty per cent of businesses don't have an online booking system set up," he said, adding that the Assistant would be available in four new voices.

Google News

Today we're rolling out an all-new @GoogleNews—using the best of AI to organize what’s happening in the world to help you learn more about the stories that matter to you → https://t.co/z8234zDXU1 #io18 pic.twitter.com/6QkX6x6OiD

— Google (@Google) 8 May 2018

Google also announced an entirely new, AI-driven Google News app at the conference. According to the company, the app will use a machine-learning technique called reinforcement learning to study user behaviour and surface relevant information.

Android operating system P

With #AndroidP enjoy smart features like adaptive battery and brightness, a simplified @Android experience, and more ways for your device to adjust to you → https://t.co/4hHcHZCBAq #io18 pic.twitter.com/EZBWYoUJHO

— Google (@Google) 8 May 2018

Moving on to AI-driven features and improvements in Android P, the next version of the Android operating system, which is in beta, Pichai said the firm has introduced adaptive battery, adaptive brightness, Slices and App Actions.

Explaining in detail, Pichai said that the new operating system will come with machine-learning algorithms that will tweak battery and brightness settings of the smartphone based on user behaviour. The company said that the battery management feature will include monitoring background apps as well.

Talking about Slices, the company said the feature is expected to provide secondary information that will help users make better, more relevant choices. For example, if a user searches for an app, Slices will surface relevant information about the app and its features. Google showed that as soon as a user searched for ride-hailing company Lyft, the phone surfaced information such as the ride fare and travel time to work.

The company also showcased a new feature called App Actions, which predicts what users are likely to do next based on their behaviour and suggests relevant actions. The company also said that, given the dearth of machine-learning engineers, it was releasing ML Kit for Android P, a machine-learning toolkit for developers.

Google Maps

Giving you the confidence to explore and experience everything from new places in your local neighborhood to the far flung corners of the world, see more of what's coming to @googlemaps later this summer → https://t.co/6tSsWeDak8 #io18 pic.twitter.com/6swWaesjob

— Google (@Google) 8 May 2018

Describing Maps as smarter, Google said it could now use AI to add addresses in previously unmapped rural areas around the world, and help people find parking space alongside traffic updates. In addition, Maps will get a new Explore tab informing users about events and activities in their area of interest, while a 'For You' tab will let users follow areas or restaurants so that they don't miss any updates.

Like your own expert sidekick to help you quickly assess options and confidently make a decision, we've created "Your match" on @googlemaps—a number that suggests how likely you are to enjoy a place based on your unique preferences. #io18 pic.twitter.com/lzDCt19Nnd

— Google (@Google) 8 May 2018

The internet giant also said that it had devised a new feature called 'Your Match', meant to act as a wingman when users want to try something new, such as visiting a new restaurant. When the user taps on any food or drink venue, the feature displays a “match”, a number that suggests how likely the user is to enjoy the place. Google said it uses machine learning to generate this number from factors such as the food and drink preferences the user has selected in Google Maps, places the user has been to, and whether the user has rated a restaurant or added it to a list. "Your matches change as your own tastes and preferences evolve over time. It’s like your own expert sidekick, helping you quickly assess your options and confidently make a decision," it said.

In another update to Maps, Google said it was adding a feature that combines the smartphone camera with Google Street View to help users navigate better. Explaining the new tool, a top Google executive said that users find it difficult to navigate with Maps while walking, because instructions such as 'head south' give a person no idea which way south is. To help orient the user, Maps will use the camera to study nearby buildings and show the right direction.

Google Lens

From traffic signs to business cards to restaurant menus, words are everywhere. Now, with smart text selection, Google Lens can connect the words you see with the answers and actions you need. #io18 pic.twitter.com/NB4Ima4is4

— Google (@Google) 8 May 2018

The internet giant introduced Google Lens last year in Photos and Assistant. Lens uses AI and vision-learning algorithms to answer questions about objects when the camera is pointed at them. This time, Google announced major updates to Lens. First up is smart text selection, a feature that connects the words the user sees with the answers and actions the user needs. Users can now copy text from the real world, such as recipes, gift card codes or Wi-Fi passwords, and paste it on their phones. "Lens helps you make sense of a page of words by showing you relevant information and photos. Say you’re at a restaurant and see the name of a dish you don’t recognise. Lens will show you a picture to give you a better idea," Google explained, adding that this required recognising not just the shapes of letters, but also the meaning and context surrounding the words. This is where all its years of language understanding in Search helped.

In a few weeks, a new Google Lens feature called style match will help you look up visually similar furniture and clothing, so you can find a look you like. #io18 pic.twitter.com/mH4HFFZLwH

— Google (@Google) 8 May 2018

Next up is style match. Point Lens at an object that catches your eye and it will not only provide relevant information about it but also match it with similar products and offer the chance to purchase them, if available. The company also said that Lens would soon work in real time, which means drawing on its neural networks, TPUs and on-device intelligence. "Now you’ll be able to browse the world around you, just by pointing your camera. This is only possible with state-of-the-art machine learning, using both on-device intelligence and cloud TPUs, to identify billions of words, phrases, places, and things in a split second," it said.

And Lens will soon work in real time. By proactively surfacing results and anchoring them to the things you see, you'll be able to browse the world around you, just by pointing your camera → https://t.co/A1nUSk8zsK #io18 pic.twitter.com/0xNI4dZez8

— Google (@Google) 8 May 2018
